Essential Features of Creative Collaboration Platforms (what every video team needs)

Not sure which collaboration platform to pick? Learn the 12 essential features creative teams need (frame-accurate review, versioning, MAM, security, automation, AI tagging, and more), plus what to test for each one during a pilot.

What “essential” actually means for creative teams

Not all collaboration tools are created equal. For video teams, “essential” features are the ones that remove friction from creative work: they make feedback unambiguous, keep versions tidy, let clients and freelancers join without chaos, and surface the right files quickly so editors stay in flow. Over the past two years, the market has evolved from simple share-and-comment players to platforms that add metadata, automation, and tighter NLE (nonlinear editor) integrations, so evaluate tools against the real daily pain points your team faces.

Below are the 12 features every modern creative collaboration platform should offer, why they matter, and what to test for when you pilot a product.

The 12 essential features (and how to test them)

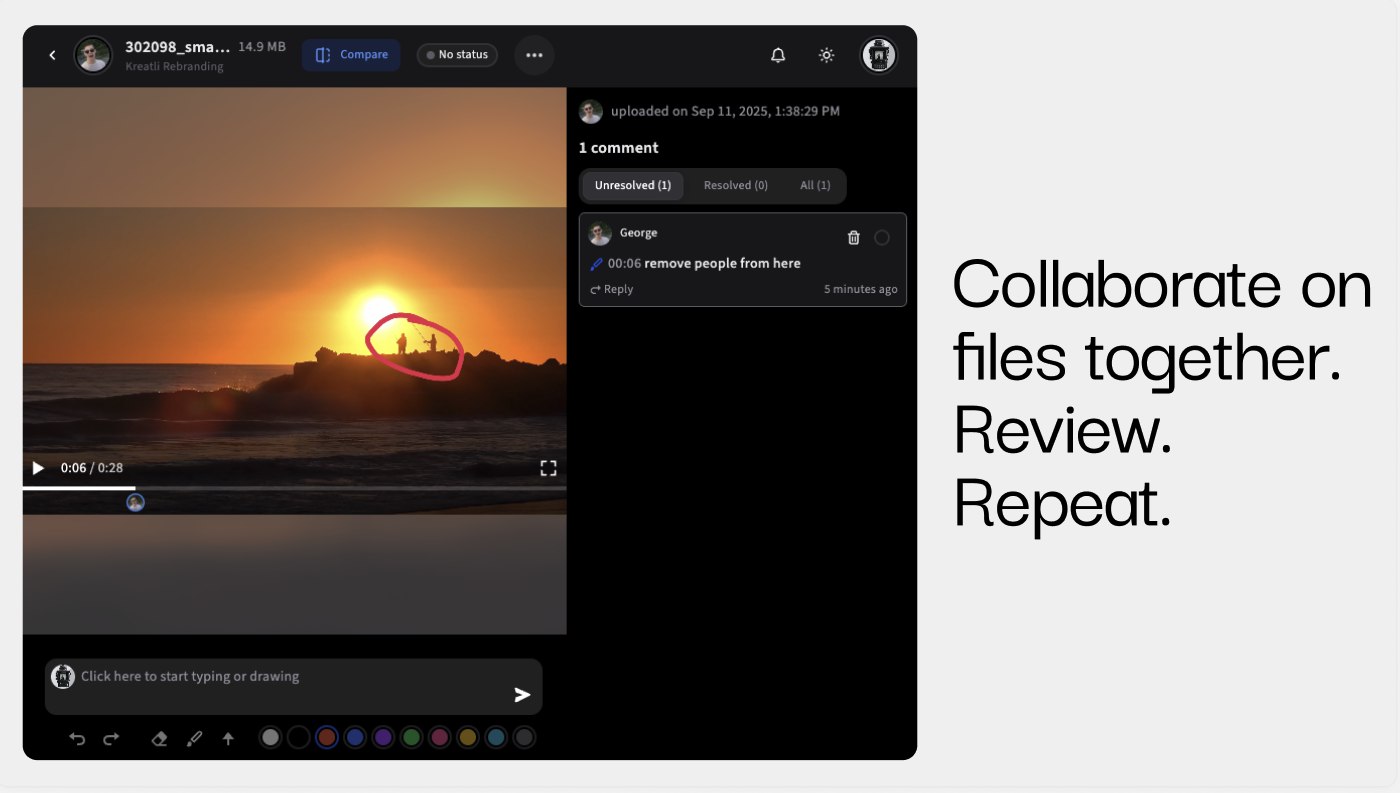

1) Frame-accurate review & markup

Why it matters: Video feedback is useless if reviewers can’t pin notes to an exact frame or timecode. Frame-accurate comments remove ambiguity and cut rework.

What to test: Ask reviewers to place three timecode-specific comments and measure how long editors take to resolve them.
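“Frame-accurate” means every comment resolves to one specific frame. As a minimal sketch, assuming non-drop-frame timecode in HH:MM:SS:FF form at an integer frame rate (drop-frame rates such as 29.97 fps need an extra correction not shown here), the mapping from a reviewer's timecode to an absolute frame index looks like this. The function names are illustrative, not any platform's API.

```python
def timecode_to_frame(timecode: str, fps: int) -> int:
    """Convert non-drop-frame 'HH:MM:SS:FF' timecode to an absolute frame index."""
    hours, minutes, seconds, frames = (int(part) for part in timecode.split(":"))
    if not (0 <= minutes < 60 and 0 <= seconds < 60 and 0 <= frames < fps):
        raise ValueError(f"invalid timecode {timecode!r} at {fps} fps")
    return ((hours * 60 + minutes) * 60 + seconds) * fps + frames


def frame_to_timecode(frame: int, fps: int) -> str:
    """Inverse mapping: absolute frame index back to 'HH:MM:SS:FF'."""
    total_seconds, frames = divmod(frame, fps)
    total_minutes, seconds = divmod(total_seconds, 60)
    hours, minutes = divmod(total_minutes, 60)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}:{frames:02d}"


# A note pinned at 00:01:30:12 in a 24 fps cut resolves to exactly one frame.
frame = timecode_to_frame("00:01:30:12", fps=24)
print(frame)                         # 2172
print(frame_to_timecode(frame, 24))  # 00:01:30:12
```

If a platform stores comments this way, two reviewers who type the same timecode are guaranteed to be talking about the same frame, which is exactly the ambiguity the feature is meant to remove.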

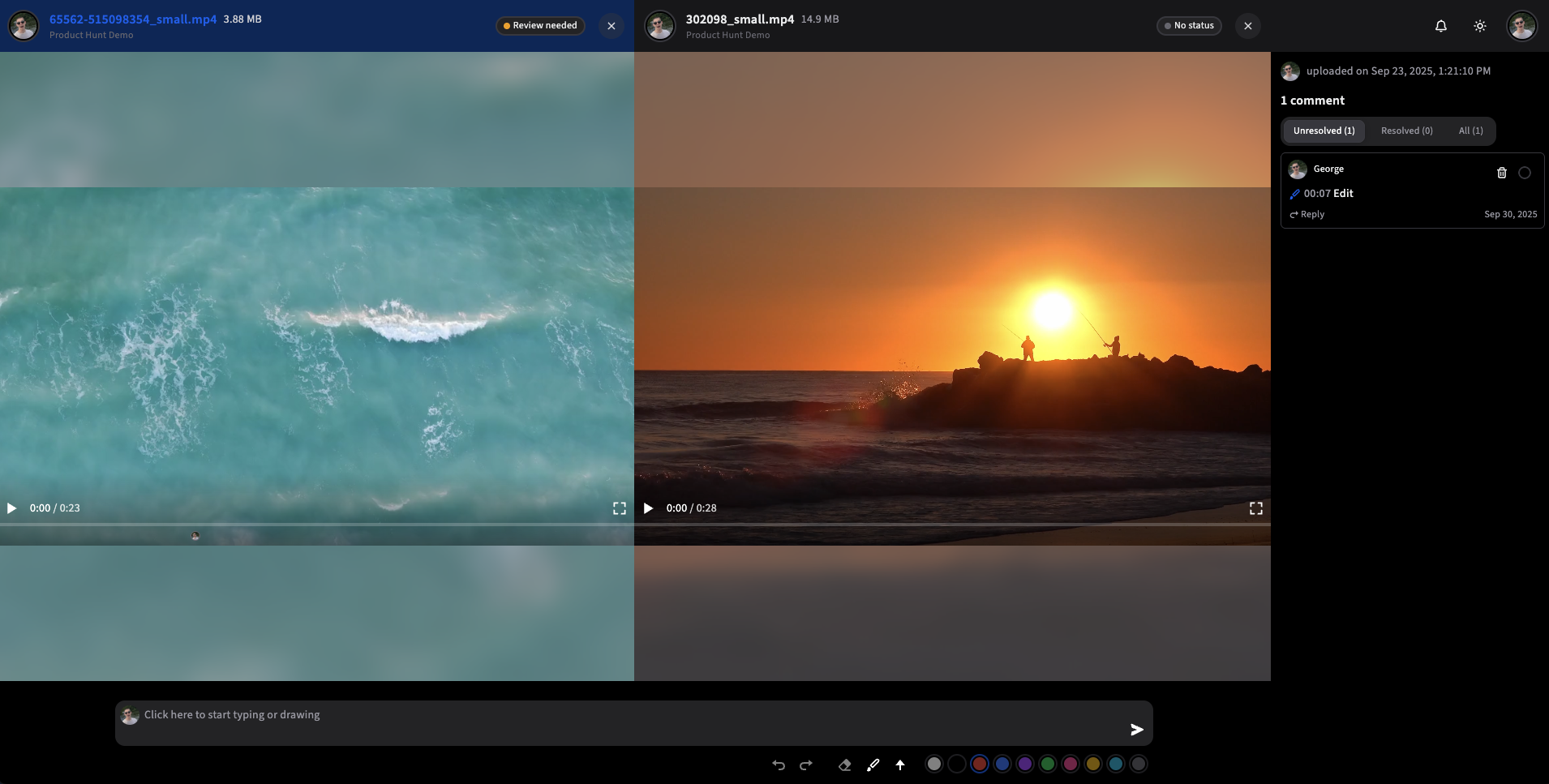

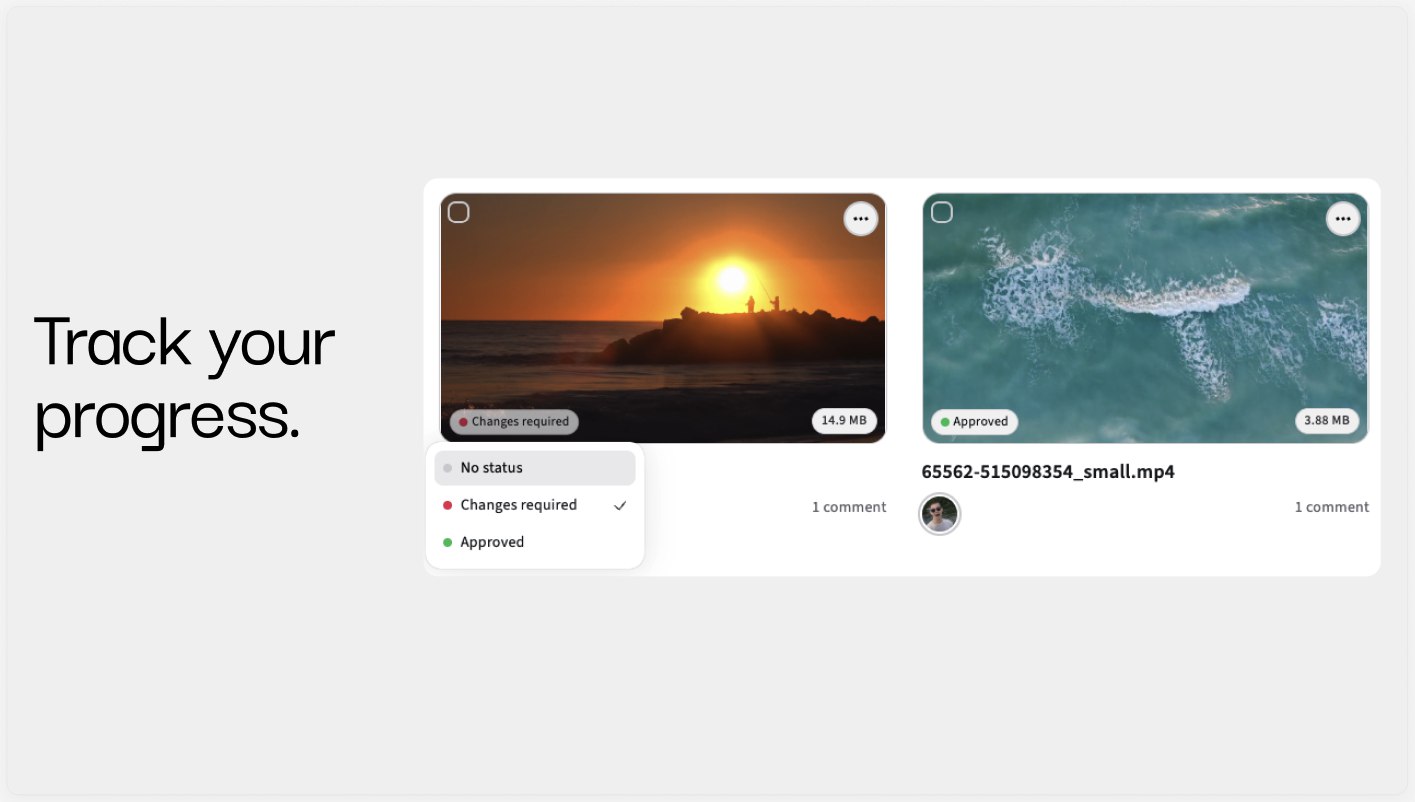

2) Version history, approval flows & audit trails

Why it matters: Teams need to know which version is final and who approved it - for billing, legal, and sanity. Robust versioning also makes rollbacks safe.

What to test: Upload sequential versions, approve one, then verify the audit log shows approver, timestamp, and change notes.
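If a shortlisted platform can export its audit log (check which formats it actually supports), this check can be scripted during the pilot. The sketch below assumes a hypothetical export where each entry is a dictionary with `version`, `action`, `approver`, `timestamp`, and `notes` fields; rename them to match the real export schema.

```python
from datetime import datetime

# Hypothetical export field names; rename to match the platform's real schema.
REQUIRED_FIELDS = ("approver", "timestamp", "notes")


def check_approval_entries(entries: list[dict]) -> list[str]:
    """Return human-readable problems found in the approval events of an audit log."""
    approvals = [e for e in entries if e.get("action") == "approved"]
    if not approvals:
        return ["no approval events found"]
    problems = []
    for entry in approvals:
        version = entry.get("version", "?")
        for name in REQUIRED_FIELDS:
            if not entry.get(name):
                problems.append(f"version {version}: missing {name}")
        timestamp = entry.get("timestamp")
        if timestamp:
            try:
                datetime.fromisoformat(timestamp)
            except (TypeError, ValueError):
                problems.append(f"version {version}: unparseable timestamp")
    return problems


# Illustrative sample log, not real platform output.
log = [
    {"version": "v1", "action": "uploaded", "timestamp": "2024-05-01T09:00:00+00:00"},
    {"version": "v2", "action": "approved", "approver": "Dana",
     "timestamp": "2024-05-02T14:30:00+00:00", "notes": "Final color pass OK"},
]
print(check_approval_entries(log))  # []
```

An empty list means every approval event names its approver, carries a parseable timestamp, and includes change notes.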

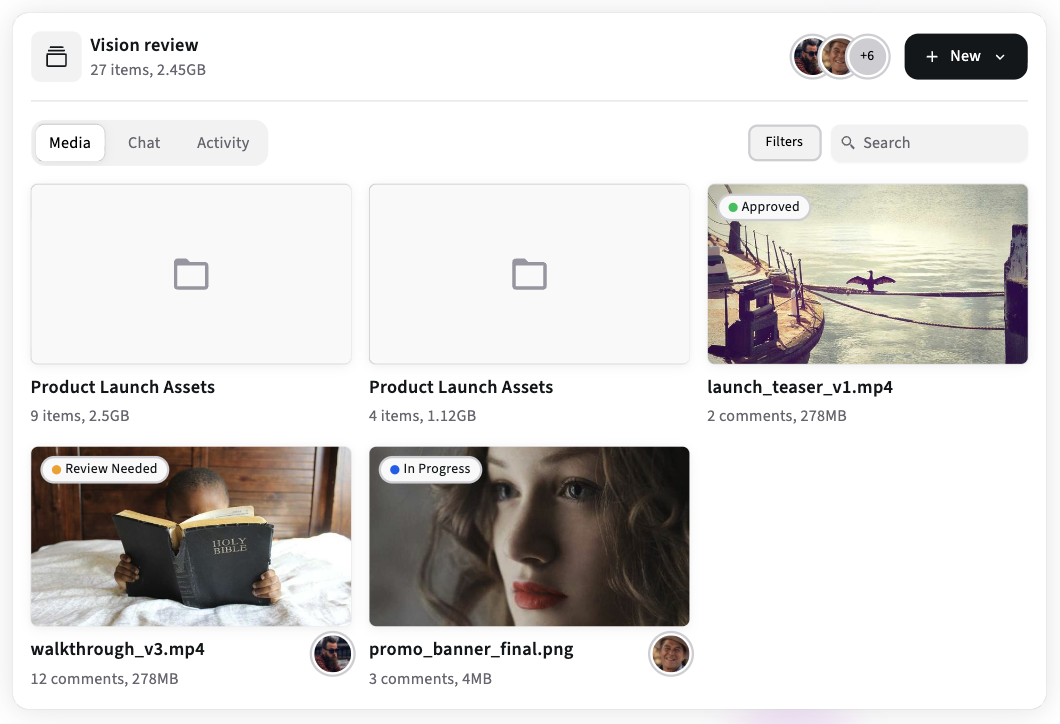

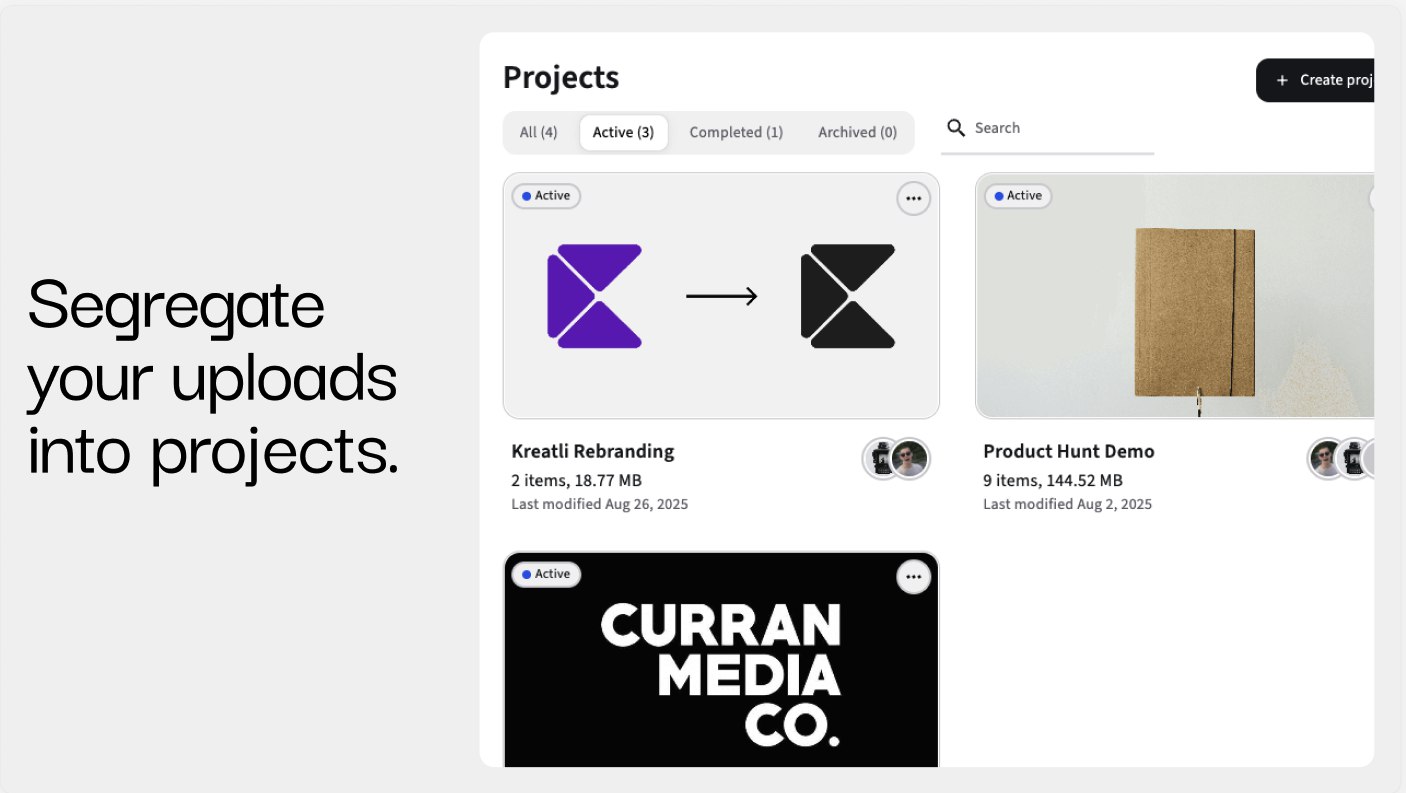

3) Project-based workspaces (contextual organization)

Why it matters: Grouping files, chats, comments, and milestones around a project preserves context and reduces hunting across apps.

What to test: Create a project, invite collaborators, add files and chat messages, and confirm everything stays scoped to that workspace when you later filter or export.

4) Integrations with NLEs, cloud storage & collaboration apps

Why it matters: Editors shouldn’t be forced to export to awkward formats or copy links manually. Deep integrations (Premiere Pro, DaVinci Resolve, Creative Cloud, Google Drive, Dropbox, Slack) speed up workflows and reduce errors.

What to test: Try a roundtrip: send a clip from your NLE, collect comments, and import notes back into the editing timeline.

5) Media asset management (MAM) & metadata search

Why it matters: When your library grows, advanced metadata, searchable tags, and AI auto-tagging turn a random dump into an accessible archive. Teams can re-use footage and avoid recreating lost assets.

What to test: Upload a batch of clips, tag them manually with metadata or let the tool auto-tag them, then search on obscure attributes (location, speaker, keyword).

6) Storage, proxies & streaming performance

Why it matters: High-res video is heavy. Good platforms stream proxies, generate lightweight previews, and let editors work without downloading full files every time.

What to test: Upload a 4K master, then verify playback smoothness in the browser and whether the platform offers proxy generation or adaptive streaming.

7) Permissions, client access & guest reviewer flows

Why it matters: Clients and freelancers need access to specific content without seeing internal roadmaps or hidden files. Granular permission controls prevent accidental leaks and simplify onboarding.

What to test: Invite a guest reviewer with limited access, confirm they can review and comment but not see other projects or billing info.
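The behavior to look for is deny-by-default, per-project access. Here is a minimal sketch of that rule, assuming a hypothetical role model (`owner`, `editor`, `guest_reviewer`) and grants keyed by project; real platforms define their own roles and permission names.

```python
# Hypothetical role model for illustration; real platforms define their own roles.
ROLE_PERMISSIONS = {
    "owner": {"view", "comment", "approve", "upload", "billing"},
    "editor": {"view", "comment", "upload"},
    "guest_reviewer": {"view", "comment"},
}

# Grants are scoped per project: user -> {project: role}.
GRANTS = {
    "client@example.com": {"spring-campaign": "guest_reviewer"},
    "lead@studio.example": {"spring-campaign": "owner", "internal-roadmap": "owner"},
}


def can(user: str, action: str, project: str) -> bool:
    """True only if the user holds a role on this project that allows the action."""
    role = GRANTS.get(user, {}).get(project)
    return role is not None and action in ROLE_PERMISSIONS[role]


guest = "client@example.com"
print(can(guest, "comment", "spring-campaign"))  # True
print(can(guest, "view", "internal-roadmap"))    # False
print(can(guest, "billing", "spring-campaign"))  # False
```

Anything not explicitly granted is refused, so a guest invited to one project sees nothing else. That is the outcome the guest-reviewer test should confirm.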

8) Automation & routing (workflows)

Why it matters: Manual routing wastes time. Automation (auto-assign reviewers, enforce approval sequences, notify stakeholders on changes) keeps projects moving predictably - especially in enterprise setups.

What to test: Configure a simple workflow: when Version X is uploaded, notify person A, then after approval notify person B. Confirm the sequence runs without manual intervention.
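The sequence in this test is a small state machine. As a hedged sketch (the class and method names are hypothetical, and notifications are recorded in a list instead of being delivered), sequential routing can be modeled like this:

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class SequentialApproval:
    """Hypothetical routing rule: each reviewer is notified only after the
    previous reviewer approves the current version."""
    reviewers: List[str]
    step: int = 0
    version: Optional[str] = None
    notifications: List[str] = field(default_factory=list)

    def on_upload(self, version: str) -> None:
        # A new version restarts the chain at the first reviewer.
        self.version = version
        self.step = 0
        self._notify(self.reviewers[0], f"{version} is ready for review")

    def on_approve(self, reviewer: str) -> None:
        # Only the reviewer whose turn it is can advance the chain.
        if self.version is None or self.is_complete():
            raise RuntimeError("nothing is awaiting approval")
        expected = self.reviewers[self.step]
        if reviewer != expected:
            raise PermissionError(f"waiting on {expected}, not {reviewer}")
        self.step += 1
        if not self.is_complete():
            nxt = self.reviewers[self.step]
            self._notify(nxt, f"{self.version} approved by {reviewer}; your turn")

    def is_complete(self) -> bool:
        return self.step >= len(self.reviewers)

    def _notify(self, person: str, message: str) -> None:
        # Stand-in for real delivery (email, Slack, in-app): just record it.
        self.notifications.append(f"to {person}: {message}")


flow = SequentialApproval(reviewers=["person A", "person B"])
flow.on_upload("Version X")
flow.on_approve("person A")
print(flow.notifications)
```

Person B hears nothing until person A approves, and an out-of-turn approval is rejected. A platform that passes this test should show the same ordering with no one nudging the chain manually.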

9) Audit, reporting & analytics

Why it matters: Visibility into review times, bottlenecks, and reviewer responsiveness helps managers optimize processes and prove ROI to clients.

What to test: Pull a report showing average time-to-approval per project and a breakdown of comments per reviewer.
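If a platform's built-in reports fall short, the same two numbers can be computed from exported data to cross-check them. This sketch assumes a hypothetical export with `uploaded`/`approved` timestamps per version and one row per comment. The sample rows are illustrative only.

```python
from collections import Counter, defaultdict
from datetime import datetime
from statistics import mean

# Hypothetical sample export: one row per reviewed version, one row per comment.
versions = [
    {"project": "Spring Campaign", "uploaded": "2024-05-01T09:00", "approved": "2024-05-02T09:00"},
    {"project": "Spring Campaign", "uploaded": "2024-05-03T10:00", "approved": "2024-05-03T22:00"},
    {"project": "Product Demo", "uploaded": "2024-05-04T08:00", "approved": "2024-05-06T08:00"},
    {"project": "Product Demo", "uploaded": "2024-05-07T08:00", "approved": None},
]
comments = [{"reviewer": "Dana"}, {"reviewer": "Dana"}, {"reviewer": "Sam"}]


def hours_between(start: str, end: str) -> float:
    delta = datetime.fromisoformat(end) - datetime.fromisoformat(start)
    return delta.total_seconds() / 3600


def avg_hours_to_approval(rows: list[dict]) -> dict[str, float]:
    """Mean upload-to-approval time per project, ignoring versions not yet approved."""
    by_project = defaultdict(list)
    for row in rows:
        if row.get("approved"):
            by_project[row["project"]].append(hours_between(row["uploaded"], row["approved"]))
    return {project: mean(hours) for project, hours in by_project.items()}


def comments_per_reviewer(rows: list[dict]) -> dict[str, int]:
    return dict(Counter(row["reviewer"] for row in rows))


print(avg_hours_to_approval(versions))  # {'Spring Campaign': 18.0, 'Product Demo': 48.0}
print(comments_per_reviewer(comments))  # {'Dana': 2, 'Sam': 1}
```

Versions still awaiting approval are excluded from the average rather than counted as zero, which would otherwise make slow projects look fast.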

10) Security & compliance (encryption, SSO, retention policies)

Why it matters: Agencies and brands handle sensitive material; enterprise customers require SSO, role-based access, retention rules, and data residency options.

What to test: Ask for security documentation (SOC 2 report, ISO/IEC 27001 certification, GDPR compliance details), enable SSO in a test org, and confirm audit logs capture access events.

11) Mobile/on-set support & Camera-to-Cloud (C2C)

Why it matters: Faster turnarounds come when footage can be uploaded directly from set to the review workspace.

What to test: Upload from a mobile device or on-set tablet and verify the file appears as a proxy for remote reviewers within minutes.

12) AI features (auto-tagging, transcription, smart highlights)

Why it matters: AI can auto-generate transcripts, tag people and objects, surface “best takes,” and accelerate search, turning an archive into an active asset base. Several MAM vendors and modern platforms now include AI metadata features.

What to test: Run a clip through the platform’s AI tools and inspect the accuracy of auto-generated tags, speaker separation in transcripts, and tag discoverability.

Quick comparison (which platforms excel at which features)

| Feature | Good examples to try |
|---|---|
| Frame-accurate review | Kreatli, Frame.io, Filestage |
| Automation & routing | Ziflow |
| MAM & AI tagging | Iconik, Frontify, dedicated MAMs |
| Camera-to-Cloud / on-set uploads | Frame.io |
| Lightweight all-in-one (chat + files + reviews) | Kreatli, Filestage (for agency flows) |

(Use this table as a quick map when you shortlist tools.)

Conclusion - summary & next step

Creative collaboration tools are only valuable when they solve real daily frictions: unclear feedback, lost versions, slow approvals, and unrecoverable assets. When you evaluate platforms, prioritize frame-accurate review, versioning and approvals, project-scoped workspaces, integrations with your NLEs and storage, MAM/search, and the security and automation features your team actually needs. Run a short pilot using the “What to test” steps above and measure review rounds and onboarding friction. The right tool should save hours per project, not just add another login.

Start a free Kreatli project

Test frame-accurate reviews and project workspaces today.